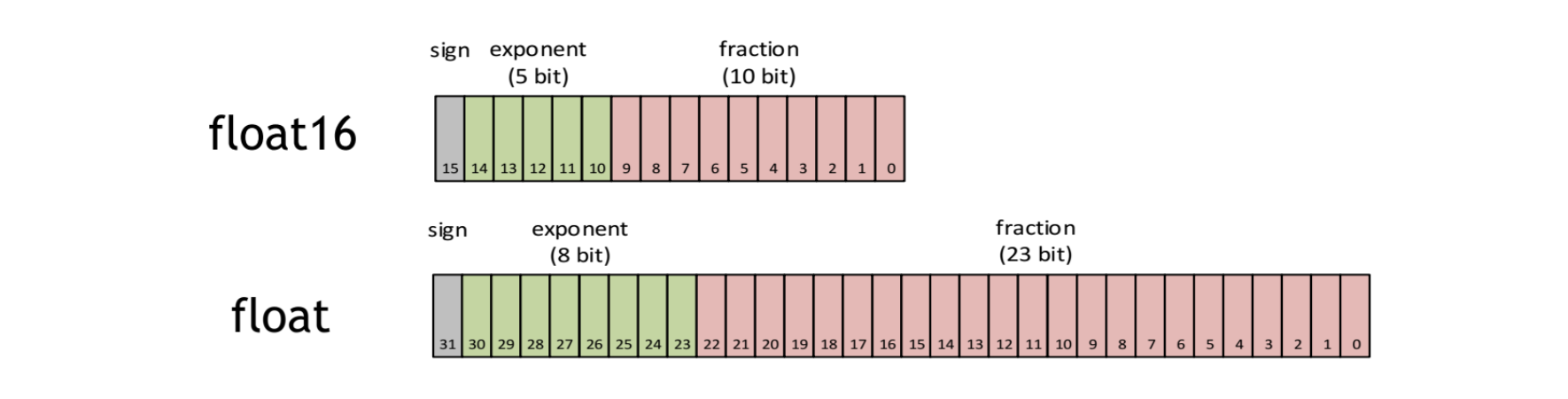

Revisiting Volta: How to Accelerate Deep Learning - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else) - The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation